My Personal AI on My Personal Supercomputer

NVIDIA describes the NVIDIA DGX Spark as “A Grace Blackwell AI supercomputer on your desk.” Aible’s founding mission, “I am Aible!”, was to empower everyone to build their own custom AI for their unique needs, on their unique data, in minutes. A personal supercomputer seemed like a massive potential force multiplier for that mission. Eager to explore the possibilities, Aible dove in to experience firsthand how DGX Spark could help turn this vision into reality.

The NVIDIA DGX Spark specifications are quite exciting, given that the Aible team has previously seen massive performance gains from end-to-end agents running on NVIDIA Grace Hopper and NVIDIA Grace Blackwell architectures. In fact, in collaboration with the NVIDIA AI Aerial team, Aible previously published a benchmark showing that even simple end-to-end agents run much faster on such converged architectures. The benefit comes from running everything end-to-end (the models, the agent coordination, the tools, and the data infrastructure) on the same integrated processor at the same time. This completely avoids asynchronous communication between the agent components, and in most cases the agent does not even have to write anything to disk to execute end-to-end.

Aible is very often used for agentic analytics work, where it automatically looks at millions of variable combinations to find key hidden insights in the data. For example, for a Fortune 500 retailer, Aible automatically analyzes how prices and transaction volumes are changing across stores, SKUs, customer types, and so on, to immediately identify how the business is changing in these turbulent times. The Aible Information Model, which automatically evaluates billions of rows of data across millions of variable combinations, is built using highly parallelizable algorithms that greatly benefit from the NVIDIA Grace processor's high-bandwidth Scalable Coherency Fabric for inter-chip communication and its advanced Single Instruction Multiple Data (SIMD) capabilities. Aible has already proven this approach on the NVIDIA Grace Hopper and larger NVIDIA Grace Blackwell platforms, so we had reason to believe we would see similar benefits from the NVIDIA DGX Spark.
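To make the idea of scanning variable combinations concrete, here is a minimal Python sketch. It is not the Aible Information Model; the toy rows, the column names (`store`, `sku`, `customer`, `period`, `sales`), and the helper functions are all hypothetical, and a thread pool stands in for whatever parallel execution the real system uses. It only illustrates that each combination can be scored independently of the others, which is what makes this kind of workload easy to parallelize.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations

# Hypothetical toy transactions; column names are illustrative only.
ROWS = [
    {"store": "A", "sku": "X", "customer": "new", "period": "last", "sales": 100.0},
    {"store": "A", "sku": "X", "customer": "new", "period": "this", "sales": 160.0},
    {"store": "A", "sku": "Y", "customer": "loyal", "period": "last", "sales": 200.0},
    {"store": "A", "sku": "Y", "customer": "loyal", "period": "this", "sales": 190.0},
    {"store": "B", "sku": "X", "customer": "loyal", "period": "last", "sales": 150.0},
    {"store": "B", "sku": "X", "customer": "loyal", "period": "this", "sales": 90.0},
    {"store": "B", "sku": "Y", "customer": "new", "period": "last", "sales": 50.0},
    {"store": "B", "sku": "Y", "customer": "new", "period": "this", "sales": 55.0},
]
DIMENSIONS = ("store", "sku", "customer")


def variable_combinations(dimensions):
    """Yield every non-empty combination of the given dimensions."""
    for size in range(1, len(dimensions) + 1):
        yield from combinations(dimensions, size)


def score_combination(combo, rows=ROWS):
    """Return (combo, segment, change in sales) for each segment of one combination."""
    totals = defaultdict(lambda: {"last": 0.0, "this": 0.0})
    for row in rows:
        segment = tuple(row[d] for d in combo)
        totals[segment][row["period"]] += row["sales"]
    return [(combo, seg, t["this"] - t["last"]) for seg, t in totals.items()]


def rank_changes(dimensions=DIMENSIONS, top=3):
    """Score all combinations independently and return the largest changes."""
    combos = list(variable_combinations(dimensions))
    # Each combination is independent, so the work can be spread across workers.
    with ThreadPoolExecutor() as pool:
        batches = pool.map(score_combination, combos)
    flat = [item for batch in batches for item in batch]
    return sorted(flat, key=lambda item: abs(item[2]), reverse=True)[:top]


if __name__ == "__main__":
    for combo, segment, change in rank_changes():
        print(combo, segment, change)
```

With only three dimensions there are seven combinations to check. The count grows exponentially with the number of dimensions, which is why a real workload of this kind leans so heavily on parallel hardware.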

But the NVIDIA DGX Spark was just so small! Could it really handle the expansive work Aible wanted to throw at it? Turns out it could. We were eager to try many different workloads on this small but mighty system. For larger projects, it is possible to connect two NVIDIA DGX Spark systems together using NVIDIA ConnectX networking in order to run different models on different NVIDIA DGX Spark systems or span larger models across multiple DGX Spark systems.

Aible tested 48 different use cases on the NVIDIA DGX Spark, across the following types of workloads:

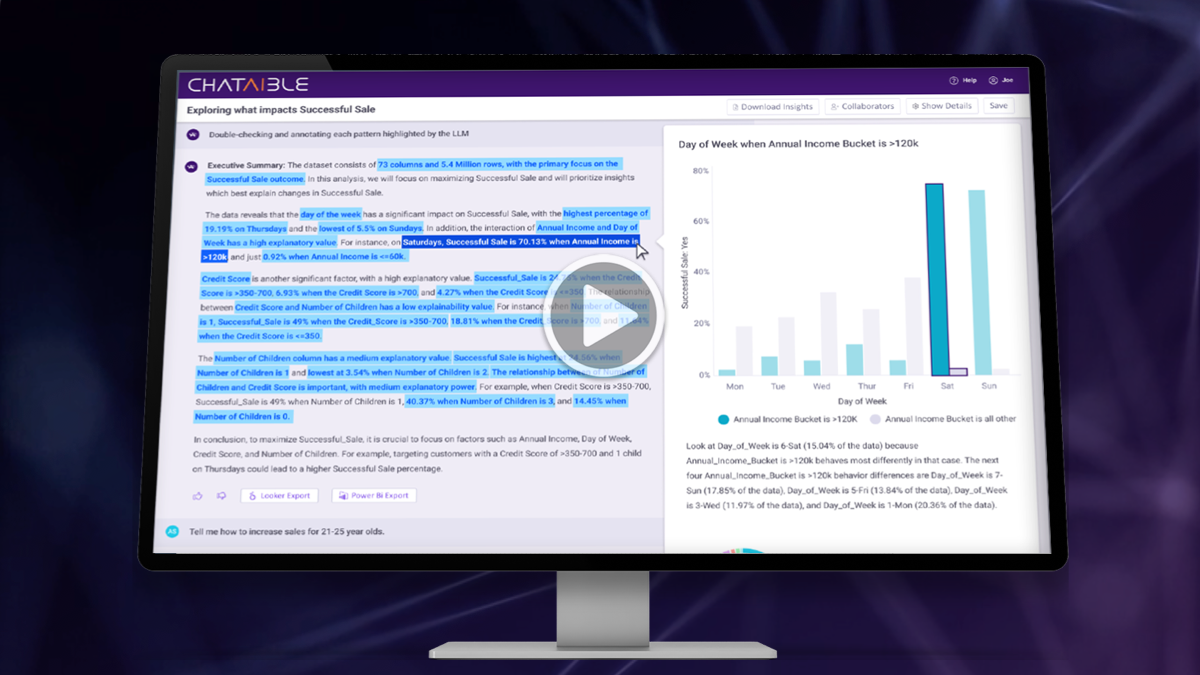

- Agentic analytics that automatically finds insights in the data. To perform well on these use cases, NVIDIA DGX Spark needs to handle highly parallelized models on its NVIDIA Grace CPU, handle language models efficiently on the NVIDIA Blackwell GPU, and pass information efficiently between the two. Two variants were tested: use cases that detect the hidden drivers in the data (for example, what drives sales conversions or patient readmissions), and use cases that detect what drives changes in the data over time (for example, how sales patterns changed this week vs. last week, or this quarter vs. the same quarter last year).

- Reasoning models for Natural Language Querying that can be adjusted live based on end-user feedback. This use case assesses how well the Aible Intern Reasoning Models, based on Meta Llama, perform on NVIDIA DGX Spark, and how well the models adjust live based on end-user feedback. Performance here depends mostly on how quickly the agent coordination on the Grace CPU can interact with the language model running on the Blackwell GPU.

- Autonomous agents using tools. When the Agent Autonomy setting is set to “High,” Aible generates autonomous agents based on the user’s request. Such agents leverage the NVIDIA NeMo Agent Toolkit to access Aible data preparation, augmented analytics, and other tools, and to access other Aible agents as tools. These agents used the Aible Intern model for this use case. Performance here depends mostly on how quickly the agent coordination on the Grace CPU can interact with the language model running on the Blackwell GPU and with the tools running on the Grace CPU, so the speed of interaction between the different components is key.

- Standard unstructured Retrieval Augmented Generation (RAG) use cases. This use case requires a lightweight vector database to be brought up on demand on the Grace CPU. It involves several interactions back and forth between the agent coordination and the vector database on the Grace CPU and the language model on the Blackwell GPU.
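The back-and-forth in the RAG workload above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not Aible's implementation: the `InMemoryVectorStore` class, the bag-of-words `embed` function, and the `generate` callback are hypothetical stand-ins for a real vector database, a real embedding model, and a real language model call.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding; a real system would use an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical lightweight store that lives entirely in process memory."""

    def __init__(self):
        self._items = []

    def add(self, text):
        self._items.append((text, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Combine retrieved passages and the question into a single prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def answer(question, store, generate):
    """Retrieve, build the prompt, then call the language model (`generate`)."""
    passages = store.search(question)
    return generate(build_prompt(question, passages))


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("returns are accepted within 30 days")
    store.add("stores open at nine in the morning")
    store.add("gift cards never expire")
    # The lambda echoes the prompt; a real agent would call a language model here.
    print(answer("when are returns accepted", store, generate=lambda p: p))
```

In this sketch every step is a plain in-process function call, which mirrors the point made in the text: when retrieval, coordination, and generation share one machine, no network hop sits between them.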

Running these use cases on NVIDIA DGX Spark also has significant data security benefits. Every component runs entirely on the single NVIDIA DGX Spark system without relying on any external resources. The only external connection required was remote access, because Aible was using the NVIDIA DGX Spark system remotely through NVIDIA LaunchPad. As such, all of these use cases could run entirely air-gapped, if desired, on a physical NVIDIA DGX Spark system. To add capabilities and increase model sizes, multiple NVIDIA DGX Spark systems could easily be connected together.

NVIDIA DGX Spark crushed these workloads, especially the agentic analytics ones. Automated analytics without language models was extremely snappy, and the parallelization possible across the Grace CPU cores was really evident. The only slow part of the agentic use cases was the language model itself, but that should be addressed as the NIM microservices are released. We believe the strong overall performance reflects what Aible has experienced previously with such architectures: all the components of the agent communicating synchronously on the same piece of silicon beats larger models and agent components communicating asynchronously over the cloud. This is a common pattern in IT. The pendulum swings between massively complex, large systems and simple, integrated, performant systems that are ‘good enough’ for what the user actually needs to do. Sure, a Cray supercomputer is powerful, but for ease of use, I prefer my pre-tested, easy-to-use iPad, thank you very much. All NVIDIA DGX Spark needs now is a comprehensive set of end-user-facing apps and an app marketplace like the one Apple built for the iPad. And that is what Aible is focusing on.

Watch the video showing us going through each of the workload types. We are going to benchmark each of the workloads once we have the NIM microservices, but we have absolutely no hesitation in saying that NVIDIA DGX Spark opens up completely new possibilities for personal AI.