How to Eliminate Bias and Ensure Fairness in AI.

Eliminating bias in AI has emerged as one of the most important challenges in technology. With AI increasingly being used to make important decisions in areas such as hiring, college admissions, medicine, and bank lending, it’s critical that these decisions don’t discriminate based on race, gender, age, or other factors.

The stakes are high. A Gartner report predicts that through 2022, 85 percent of AI projects will deliver erroneous outcomes due to bias in data, algorithms, or the teams responsible for managing them. Bias in AI leads to unfair outcomes, the perpetuation of systemic racism, and serious reputational damage and costly failures for businesses.

Unfortunately, many of the approaches that aim to mitigate AI bias don’t fix the problem. On the contrary, they often perpetuate bias and make it even harder to root out. Reactively solving these problems via best practices and governance is unlikely to scale. We need to solve them by design.

Consider this example in bank lending. If historically, a bank has not lent money to certain racial groups or genders as often as others, and the AI trains on that historical data, the recommendations of the AI will perpetuate the bias. That’s because AI learns only from data. If there’s bias inherent in your data, that will become further institutionalized when you use it to train your AI.

The conventional “solution” to this problem is to remove the race or gender variable from the AI so that it can’t directly consider those factors. Problem solved, right?

Wrong.

AI Bias is Often “Baked-In”

Even if you remove the race or gender variable from the data set, the AI will search for proxies for those variables. If women had a lower approval rate for loans, for example, then high school teachers will have a lower rate, because high school teachers are disproportionately female. If men had a higher rate of loan approval, then construction workers will have a higher rate, because construction workers are disproportionately male.

If you remove race as a variable in the data set, the AI may look at the loan applicant’s zip code and use that as a proxy to determine race. A person’s last name or the school they attended can give the AI clues about race, ethnicity, and gender. Even seemingly neutral terms can be used by AI as proxies for race or gender. Amazon stopped using a hiring algorithm after finding it favored applicants based on words like “executed” or “captured” that were more commonly found on men’s resumes.

AI is very good at finding hidden patterns – that’s what it’s designed to do. Just because you remove a potentially troublesome variable, that doesn’t mean the AI won’t pick up other variables that are highly correlated. Even after you remove the problematic variable, the bias is still perpetuated, but in an even more subtle way. And there’s a limit to how many variables you can remove before the data no longer contains enough useful information to train AI models.
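The proxy effect can be illustrated with a small sketch. The records below are invented purely for illustration, and the `approval_rate` helper and occupation labels are assumptions rather than data from any real lender. Gender is blanked out before the second calculation, yet the occupation-level rates still mirror the gender gap.

```python
from collections import defaultdict

# Hypothetical historical loan records: (gender, occupation, approved).
# All counts are made up to illustrate the proxy effect.
RECORDS = (
    [("F", "teacher", True)] * 30 + [("F", "teacher", False)] * 50
    + [("M", "teacher", True)] * 12 + [("M", "teacher", False)] * 8
    + [("M", "construction", True)] * 54 + [("M", "construction", False)] * 26
    + [("F", "construction", True)] * 8 + [("F", "construction", False)] * 12
)

def approval_rate(rows, key_index):
    """Approval rate grouped by the column at position key_index."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        key = row[key_index]
        total[key] += 1
        approved[key] += row[2]  # True counts as 1, False as 0
    return {k: approved[k] / total[k] for k in total}

# Gap in the original data, measured directly on gender.
by_gender = approval_rate(RECORDS, 0)

# "Remove" the gender variable, keeping only occupation and outcome.
without_gender = [(None, occupation, ok) for _, occupation, ok in RECORDS]
by_occupation = approval_rate(without_gender, 1)

print(by_gender)      # women are approved less often than men
print(by_occupation)  # teachers are approved less often than construction workers
```

A model trained on `without_gender` never sees gender, but it can still learn that being a teacher predicts rejection, which carries the original disparity forward.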

Remove AI Bias by Defining Fairness

There's a better way to remove bias in AI.

Think about training AI the way you would train a child. The best way to train a child isn’t simply to tell them “no” every time they do something wrong. It’s far more effective to set up guidelines and general principles of behavior so that the child can use them to figure out what to do for themselves. Occasionally, the child will still get things wrong, so you still keep an eye on them. But you proactively teach the child how to behave rather than correcting bad behavior after the fact.

AI should be trained in a similar way. The way to eliminate bias in AI is not to continually tell it “no” by removing potentially problematic variables. The best way to eliminate AI bias is to define fairness up front and to train the AI to achieve that objective. A fairness objective in the case of bank lending might be that the loan approval rate for women be the same as it is for men. Or it might be that the proportion of women whose loans are approved should be in line with the proportion of women in the community. However you define fairness – and there can be many ways of doing that – the AI can be trained to guide you toward that definition.
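The two lending objectives mentioned above can each be written as a number to measure. The following is a minimal sketch, assuming a made-up applicant pool and an assumed 50 percent share of women in the community; the function names are hypothetical. It shows that the two definitions can disagree on the same set of decisions.

```python
def approval_rate(applicants, group):
    """Fraction of applicants in `group` whose loans were approved."""
    outcomes = [approved for g, approved in applicants if g == group]
    return sum(outcomes) / len(outcomes)

def approved_share(applicants, group):
    """Fraction of all approved loans that went to `group`."""
    approved_groups = [g for g, approved in applicants if approved]
    return approved_groups.count(group) / len(approved_groups)

# Hypothetical applicant pool: (group, approved).
APPLICANTS = (
    [("women", True)] * 30 + [("women", False)] * 10
    + [("men", True)] * 45 + [("men", False)] * 15
)
COMMUNITY_SHARE_WOMEN = 0.50  # assumed figure, for illustration only

# Definition 1: women and men are approved at the same rate.
rate_gap = approval_rate(APPLICANTS, "women") - approval_rate(APPLICANTS, "men")

# Definition 2: women's share of approved loans matches their share of the community.
share_gap = approved_share(APPLICANTS, "women") - COMMUNITY_SHARE_WOMEN

print(rate_gap, share_gap)
```

In this made-up pool the approval rates are identical, so the first objective is met, while women receive a smaller share of approved loans than their assumed share of the community, so the second is not. Which gap to drive toward zero is the decision that has to be made up front.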

But remember in the case of training a child, even though we give them general guidelines to follow, we still keep an eye on them. The same should be true for training AI. After establishing our principles of fairness and training the AI to follow those objectives, we monitor the AI on an ongoing basis to make sure that it’s following our guidelines. If the AI strays from those objectives, we adjust the models accordingly.
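Ongoing monitoring could be as simple as recomputing the fairness measure on each new batch of decisions. This is a sketch under assumptions: the decision log, the period labels, and the 5-point tolerance are all hypothetical, and a real deployment would pick its own measure and threshold.

```python
def approval_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    rates = [approved[g] / totals[g] for g in totals]
    return max(rates) - min(rates)

def periods_needing_review(decisions_by_period, tolerance=0.05):
    """Return the periods whose approval gap exceeds the tolerance."""
    return [
        period
        for period, decisions in decisions_by_period.items()
        if approval_gap(decisions) > tolerance
    ]

# Hypothetical decision log keyed by month: lists of (group, approved).
LOG = {
    "2024-01": [("women", True)] * 50 + [("women", False)] * 50
               + [("men", True)] * 52 + [("men", False)] * 48,
    "2024-02": [("women", True)] * 40 + [("women", False)] * 60
               + [("men", True)] * 55 + [("men", False)] * 45,
}

flagged = periods_needing_review(LOG)
print(flagged)
```

Here the second month drifts outside the tolerance and is flagged, which is the signal to go back and adjust the models.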

Balancing Fairness and Business Objectives

Of course, there are various ways of defining fairness. In the example of a bank loan, you might want the approval rate to be equal across different races or genders. Or you might decide that the loan rejection rate should be equal across races and genders. Or you may want the portfolio of people whose loans are approved to look the same as the portfolio of loan applicants. These are all different definitions of fairness.

But if I tell a data scientist up front to create a model that would be fair, that’s extremely hard to do. What a data scientist ends up doing is creating a good model and then constraining it to introduce fairness. If the loan acceptance rate is too low for a certain race, the data scientist lowers the threshold for that group, so that for one race you’ll approve loans as long as the score is at least 20, but for another race the acceptable score is 25. You’re trying to differentiate between different races in your AI, but by simply changing the threshold, you’re using a hammer to fix the problem.
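The threshold adjustment described above looks roughly like this. The scores, group labels, and helper names are hypothetical; only the cutoffs of 20 and 25 come from the example in the text.

```python
# Hypothetical model scores for applicants in two groups: (group, score).
APPLICANTS = [
    ("A", 18), ("A", 21), ("A", 23), ("A", 27),
    ("B", 22), ("B", 26), ("B", 28), ("B", 31),
]

def approve(score, threshold):
    """A loan is approved when the score reaches the threshold."""
    return score >= threshold

def rates(thresholds):
    """Approval rate per group, given a {group: threshold} mapping."""
    result = {}
    for group, threshold in thresholds.items():
        scores = [s for g, s in APPLICANTS if g == group]
        result[group] = sum(approve(s, threshold) for s in scores) / len(scores)
    return result

# One cutoff for everyone: group A is approved far less often.
single = rates({"A": 25, "B": 25})

# The "hammer": lower the cutoff for group A until the rates match.
adjusted = rates({"A": 20, "B": 25})

print(single)
print(adjusted)
```

The approval rates line up after the adjustment, but the model producing the scores has not changed at all. Only the cutoff moved, which is why this counts as constraining a model rather than building a better one.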

What you want is to create the best possible AI for each race or gender, so that the models collectively deliver on the definition of fairness you’ve determined up front. You can only do this in the world of serverless, where you have many models available. You can choose from those many models to achieve your overall fairness goal, so that the right model is used for the right subgroup. That way, you achieve fairness across the board without constraining your AI.

One of the biggest problems with fairness in AI right now is that the concept of fairness is in competition with business objectives. The prevailing notion is that you either make money or you’re fair. This mindset is very dangerous and destructive.

This is the same mindset we saw in the early days of COVID, when many people believed that we faced a binary choice between lives and livelihoods. But we’ve learned that it’s not as simple as that, because you care about both. You have to balance them.

It’s the same with fairness and AI. When you take an AI model and constrain it to be fair, you’re implicitly trading off business outcomes for fairness. In the world of serverless, you don’t have to make that tradeoff. You have so many models to choose from that you can have much more complex goals. If your business objective is to increase your revenue by 15%, you can have the AI balance that objective with your definitions of fairness and choose the optimal model. You can balance your twin objectives of revenue growth and fairness. Those objectives are no longer in competition; they’re working together. You’re not limited to one model on a server. You get to choose from hundreds or thousands of models that are deployed in serverless.
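Choosing among many candidate models against two objectives at once can be sketched as a simple filter-and-rank step. This is an illustration only, not a description of how any particular product selects models: the `Candidate` fields, the model names, the projected numbers, and the 5-point fairness tolerance are all assumptions. The 15% revenue target is the one from the example above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    revenue_lift: float  # projected revenue increase, 0.15 means 15%
    approval_gap: float  # gap in approval rate between groups

# Hypothetical portfolio of already-trained models.
CANDIDATES = [
    Candidate("model_a", 0.19, 0.12),
    Candidate("model_b", 0.16, 0.02),
    Candidate("model_c", 0.11, 0.00),
    Candidate("model_d", 0.17, 0.04),
]

def choose_model(candidates, min_revenue_lift=0.15, max_gap=0.05):
    """Pick the highest-revenue model that meets both objectives, or None."""
    eligible = [
        c for c in candidates
        if c.revenue_lift >= min_revenue_lift and c.approval_gap <= max_gap
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda c: c.revenue_lift)

chosen = choose_model(CANDIDATES)
print(chosen)
```

With a single model, the only options would be to accept its gap or constrain it. With a portfolio, the most profitable model is passed over because it misses the fairness tolerance, the fairest model is passed over because it misses the revenue target, and the choice falls to a model that satisfies both.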

Instead of fairness being in competition with your business objectives, fairness becomes an important business objective in itself. Your AI has fairness baked in from the start, so rather than trying to correct AI bias after the fact, we can use AI proactively to be an enabler of fairness. As with many disruptive technologies, what initially looks like a problem can turn out to be a strength.

Ensure AI Fairness and Balance Business Objectives with Aible

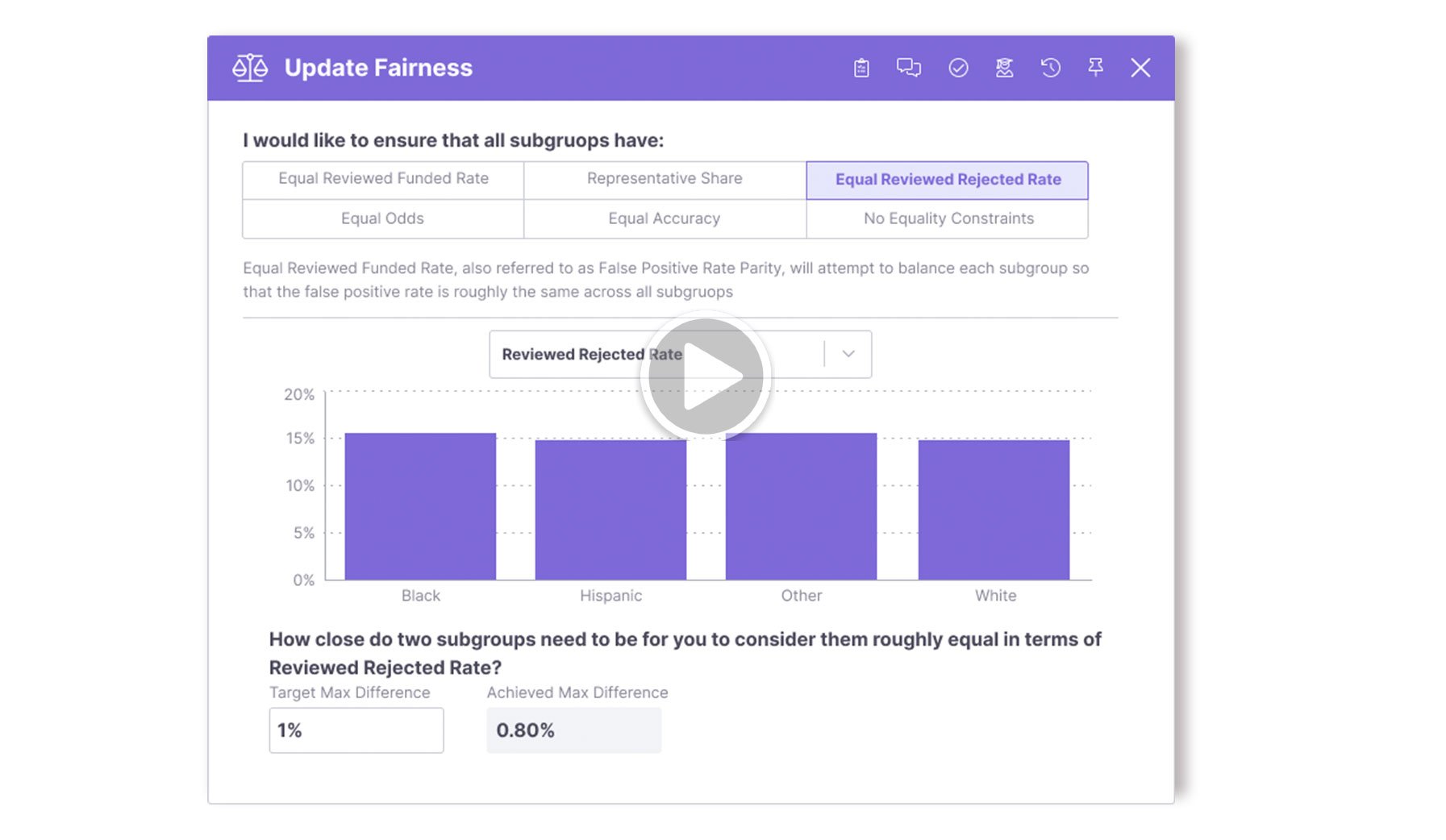

With Aible, enterprises can eliminate bias and ensure fairness with AI by defining fairness objectives up front. Aible’s serverless-first approach enables you to choose the optimal models from a portfolio of many models so that you optimally balance fairness and your business objectives and always choose the right model at the right time. You proactively avoid bias and ensure fairness up front, rather than reacting to it after the fact and still not solving the underlying bias.

See how Aible addresses bias in this short demonstration:
